Effective Testing - Reducing Non-determinism to Avoid Flaky Tests

Flaky tests are those that randomly fail for no apparent reason. If you have a flaky test, you might re-run it, over and over, until it succeeds. If you have a couple of them, the chances of all passing at the same time are slim, so maybe you ignore the failures. You know, just this one time… Soon enough, you’re not paying attention to failures on this test suite. Congratulations! Your tests are now worthless.

Prefer smaller tests

Non-determinism is often introduced as a consequence of relying on external services. For example, if our test reads data from a database, it might fail because the database is down, or because the data is missing or has changed.

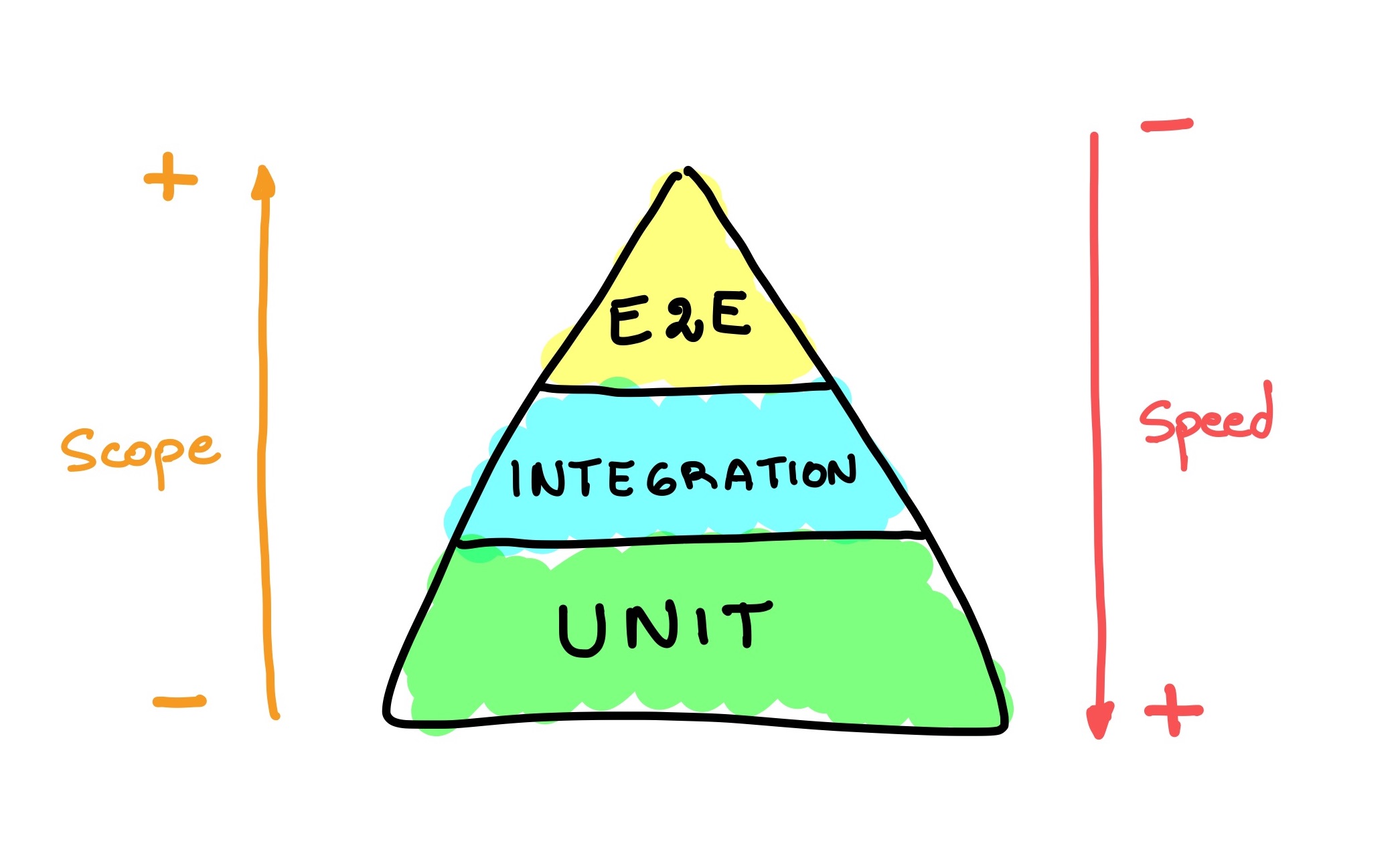

You’ve probably seen the Test Pyramid before. Tests are classified by scope, and the recommendation is to favor tests with reduced scopes (i.e. Unit Tests).

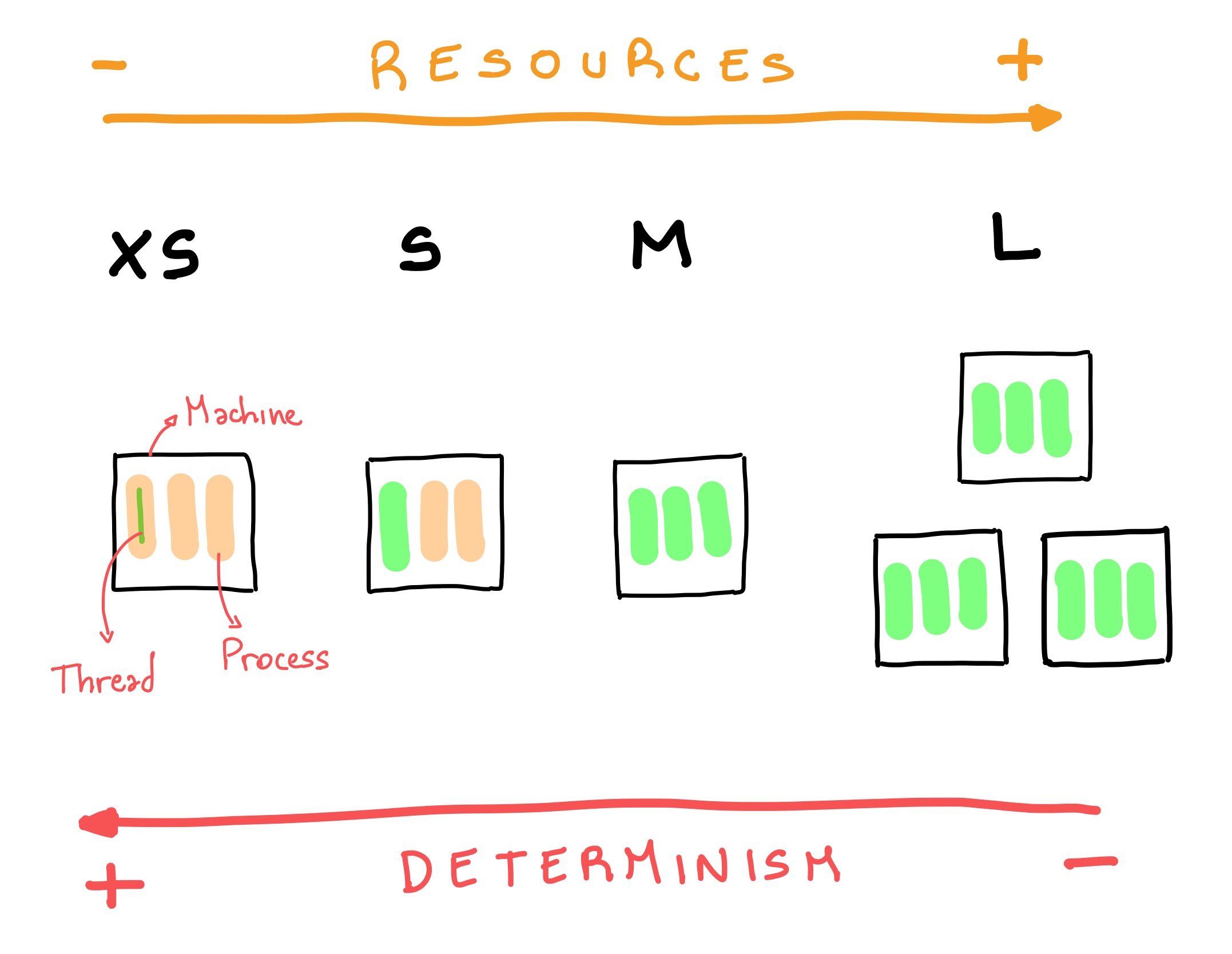

At Google they came up with a new dimension: Test Size [1]. Tests are grouped in categories based on the resources a test needs to run (memory, processes, time, etc.).

- X-Small tests are limited to a single thread or coroutine. They are not allowed to sleep, do I/O operations, or make network calls.

- Small tests run on a single process. All other X-Small restrictions still apply.

- Medium tests are confined to a single machine. They can’t make network calls to anywhere other than localhost.

- Large tests can span multiple machines. They’re allowed to do everything.

Scope and Size are related, but independent. You could have an end-to-end test of a CLI tool that runs in a single process.
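
To make the distinction concrete, here is a minimal sketch of an end-to-end test that is still Small: it exercises a whole CLI, from argument parsing to printed output, without leaving the current process. The `wc-lite` tool and the `main` and `run_cli` names are made up for illustration.

```python
import argparse
import io
from contextlib import redirect_stdout


def main(argv):
    """Hypothetical CLI entry point: prints the number of words in its argument."""
    parser = argparse.ArgumentParser(prog="wc-lite")
    parser.add_argument("text")
    args = parser.parse_args(argv)
    print(len(args.text.split()))
    return 0


def run_cli(argv):
    """Run the CLI in-process and capture what it writes to stdout."""
    buffer = io.StringIO()
    with redirect_stdout(buffer):
        exit_code = main(argv)
    return exit_code, buffer.getvalue()


def test_counts_words_end_to_end():
    exit_code, output = run_cli(["one two three"])
    assert exit_code == 0
    assert output == "3\n"
```

The scope is the entire program, yet no subprocess, file, or socket is involved, so by the definitions above the test stays Small.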

How does this tie back to our crusade against flaky tests? Simple: the smaller the test, the more deterministic it’ll be. As a bonus perk, smaller tests also tend to be faster.

Google went the extra mile and built infrastructure to enforce these constraints. For example, a test marked as Small would fail if it tried to do I/O.
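
This post doesn’t describe how Google’s infrastructure does it, but a rough approximation of the idea is easy to sketch: replace the socket constructor while a test runs, so any attempt to touch the network fails loudly. The `forbid_network` helper and `NetworkAccessError` are hypothetical names, and the guard only covers sockets, not file I/O or sleeping.

```python
import socket
from contextlib import contextmanager
from unittest import mock


class NetworkAccessError(RuntimeError):
    """Raised when guarded code tries to open a socket."""


@contextmanager
def forbid_network():
    """Make socket creation fail for the duration of the block (assumed helper)."""

    def blocked_socket(*args, **kwargs):
        raise NetworkAccessError("Small tests may not open network sockets")

    # mock.patch.object restores the real constructor when the block exits.
    with mock.patch.object(socket, "socket", blocked_socket):
        yield


def socket_is_blocked_under_guard():
    """Return True if opening a socket inside the guard raises our error."""
    with forbid_network():
        try:
            socket.socket(socket.AF_INET, socket.SOCK_STREAM)
        except NetworkAccessError:
            return True
    return False
```

A test runner could wrap every test tagged as Small in `forbid_network()`, turning an accidental network call into an immediate, deterministic failure instead of an occasional timeout.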

How to make your test small

Some ways you can reduce the size of your test:

- Use Test Doubles to avoid making calls to external services.

- Use an in-memory Database.

- Use an in-memory filesystem.

- Design your classes so that tests can provide a custom time source instead of relying on the system clock.

- Use kotlinx-coroutines-test to virtually advance time without having to make your test wait.

- Use Testcontainers to turn a Large test into a Medium one.
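
As an illustration of two of these ideas together, the sketch below tests session expiry using an in-memory SQLite database and an injected clock. `SessionStore` and `FakeClock` are hypothetical classes written for this example, and it assumes production code would pass in a real connection and a clock backed by the system time.

```python
import sqlite3
from datetime import datetime, timedelta, timezone


class FakeClock:
    """Test double for the system clock: time only moves when the test says so."""

    def __init__(self, start):
        self._now = start

    def now(self):
        return self._now

    def advance(self, delta):
        self._now += delta


class SessionStore:
    """Hypothetical class under test; its database and clock are injected."""

    def __init__(self, connection, clock, ttl=timedelta(minutes=30)):
        self._db = connection
        self._clock = clock
        self._ttl = ttl
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS sessions "
            "(token TEXT PRIMARY KEY, expires_at TEXT)"
        )

    def create(self, token):
        expires_at = self._clock.now() + self._ttl
        self._db.execute(
            "INSERT INTO sessions (token, expires_at) VALUES (?, ?)",
            (token, expires_at.isoformat()),
        )

    def is_valid(self, token):
        row = self._db.execute(
            "SELECT expires_at FROM sessions WHERE token = ?", (token,)
        ).fetchone()
        if row is None:
            return False
        return self._clock.now() < datetime.fromisoformat(row[0])


def test_session_expires_after_ttl():
    clock = FakeClock(datetime(2024, 1, 1, tzinfo=timezone.utc))
    # ":memory:" keeps the whole database inside the test process.
    store = SessionStore(sqlite3.connect(":memory:"), clock)

    store.create("abc")
    assert store.is_valid("abc")

    # Jump past the 30-minute TTL without sleeping.
    clock.advance(timedelta(minutes=31))
    assert not store.is_valid("abc")
```

Nothing here waits on a real database or on real time passing, so the test gives the same answer on every run and finishes in milliseconds.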

The trade-off

The downside of artificially isolating your tests is that they lose Fidelity: what you end up testing is further away from what will run in production. I’ve been bitten by this in the past.

The trick is to have a test distribution similar to the one proposed by the Test Pyramid. We should have lots of Small and X-Small tests, some Medium tests, and only a few Large tests.

This post is part of the Effective Testing Series.

[1] The name is unfortunate, as it’s not immediately obvious what Size refers to.